Explainable AI: Why Is XAI Important?

How do you describe a picture? Could you teach a machine to make a description of a picture? Suppose you can; is it important that you know what the machine learned?

Yes, I believe it is important to understand what the machine learned, in order to understand its limitations and assess its applicability. In this post I will justify my claim.

Why do we need explanations?

Is there a similarity in recruiting people and using an intelligent machine? Of course. Would you skip the interview when you know your candidate graduated in X at AI? I would not. Because I want to check what the candidate learned in order to assess what the best position in my organisation is for the person. Same with the intelligent machine — I want to know what it learned …

… to select the best way to use it.

Is each proof a good explanation?

Back to the example. Generating descriptions for pictures may seem a typical pattern-matching task. A neural network can be trained to do the job based on value pairs. The results on the 20% test set seem promising at first glance: 90%+ accuracy.[1] That is good news for visually impaired who have nothing to rely on now.

So, the first impression is good. However, a researcher asks: what did the neural network really learn? The answer to this question places the initial results in a different light. Typically, this kind of system learns what was captioned manually by humans and it will re-use this when presented with scenes similar to what it's seen before. As a result, when an image is presented that is not very similar to anything presented before, the caption that is most frequently used by humans will be chosen by the system (for example, 'an animal in wild nature').

This summer I participated in two scientific conferences. The first one was the workshop on controlled natural languages in Maynooth, Ireland, and the second one was the workshop on rule markup languages, simultaneous with an AI conference in Luxembourg.

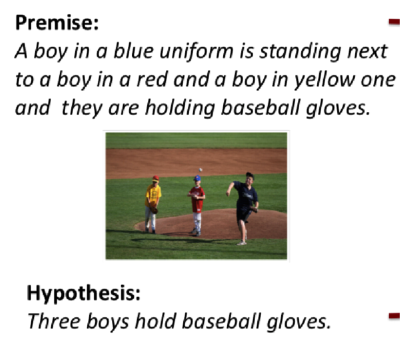

In the first conference, a keynote speech by Albert Gatt from the University of Malta on "Visual and linguistic features for recognising and generating entailments"[2] disclosed that the neural network, with a sophisticated layered algorithm I rarely see in commercial settings, would barely drop in performance when presented with a foil while, if the images really contributed to the task, you'd expect the model to drop in performance. So, the machine learned more about word frequency in the test set than about the real task at hand. We call this 'overfitting' in machine learning terminology.

However, despite many attempts of the smart professor and his team, no deeper knowledge was discovered and a question mark was placed next to the initial promising results. Conclusion:

Accuracy is not enough; understand what the machine really learned.

Despite the remarkable increase in the number of image-description systems in recent years, experimental results suggest that system performance still falls short of human performance.[3]

An explanation may uncover decision biases.

At the second conference, I got more examples from research that checks if the knowledge learned by a neural network reflects deep knowledge, abstraction, and generalisations. The conclusion is that these decision makers are easily fooled and likely to be biased. That does not necessarily need to be a problem: machines may be re-trained and usability may be limited, though fast and practical. However, it should be known and researched.

Do we need better tools?

This area of research has been given a name: 'Explainable AI', using the acronym XAI, and it is a topic dear to my heart. Like the AI methods in general, it's not a new topic. Actually, I graduated in the mid-nineties on exactly this topic. We trained a model using genetic algorithms to predict the results of a crop-breeding selection process and explained the results to the expert user by generating a decision tree from the trained model using C4.5.[4] This method was later implemented in one of the specialised tools that I worked on. However, it does not seem to be a standard feature of the platforms that are popular nowadays, and it is also difficult to make the explanation really 'humanly readable'. Some extra help from the research community is welcome though, in the end, I believe it is more about having the right mindset:

Be creative and curious, always asking why.

Explanations provide more benefits.

Explanations help people trust the outcome of the machine-made decision.

This is very important. Without trust, people will look at the decision as 'advice' and use it as one of the criteria to make their own decisions.

An example is provided by the passenger forecasting process at a large airport. They forecast the number of passengers arriving at an airport based on the flight schedule and patterns in the past. They are using sophisticated machine-learning techniques, but do they include common sense factors like holiday season, media, and weather forecast? In the end, the planners have a button to increase or decrease the forecasted passenger numbers to make a day planning. Is this what you want after all the investment in an algorithm?

It helps to include more common sense knowledge in the decision support systems.

An explanation may quickly reveal that some common sense knowledge is not taken into account.

To accurately forecast the number of passenger on the first day of a holiday season you expect this common knowledge to be part of the explanation. If not, you may want to deviate from the forecast and have a reason to do so.

It prevents making decisions based on biases.

My newspaper reported on a case that illustrates the risk and adversity of trusting algorithms without using explanations. The mortgage of a family with a more-than-average family income was declined. They were very (unpleasantly) surprised and asked for the reason. It turned out that the previous inhabitant on their address had a payment deficit on his mortgage and the algorithm had picked up the address as a 'valid' criterion to decline the mortgage request.

Decisions without explanations are not intelligent.

Ask yourself: what is decision making without inference? Whom would I accept a decision from if they are unable to explain it? Maybe your parents? Anyway, you have to think about that, right? Decision making without inference is what we sometimes call 'spirituality' but that is very far away from the world inhabited by math, logic, and computers. They should never be confused.

To conclude: I claim that all decision support systems should be able to justify their results with an explanation. In this post, I justified this claim, following the advice I give for machines. I hope it convinced you. I would like to receive your feedback and hope that explainable AI systems will result in a relationship between humans and software that lasts longer than the AI hype.

REFERENCES

[1] https://ai.googleblog.com/2016/09/show-and-tell-image-captioning-open.html

A natural question is whether our captioning system can generate novel descriptions of previously unseen contexts and interactions. The system is trained by showing it hundreds of thousands of images that were captioned manually by humans, and it often re-uses human captions when presented with scenes similar to what it's seen before.

[2] Based on work from Albert Gatt, Institute of Linguistics & Language Technology University of Malta:

The task is to classify a picture, premise, and hypothesis correctly as belonging together (called entailment) as in the following picture:

[3] http://homepages.inf.ed.ac.uk/keller/papers/jair16.pdf

Despite the remarkable increase in the number of image-description systems in recent years, experimental results suggest that system performance still falls short of human performance. A similar challenge lies in the automatic evaluation of systems using reference descriptions. The measures and the tools currently in use are not sufficiently highly correlated with human judgments, indicating a need for measures that can deal adequately with the complexity of the image description problem.

[4] https://en.wikipedia.org/wiki/C4.5_algorithm

# # #

About our Contributor:

Online Interactive Training Series

In response to a great many requests, Business Rule Solutions now offers at-a-distance learning options. No travel, no backlogs, no hassles. Same great instructors, but with schedules, content and pricing designed to meet the special needs of busy professionals.

How to Define Business Terms in Plain English: A Primer

How to Use DecisionSpeak™ and Question Charts (Q-Charts™)

Decision Tables - A Primer: How to Use TableSpeak™

Tabulation of Lists in RuleSpeak®: A Primer - Using "The Following" Clause

Business Agility Manifesto

Business Rules Manifesto

Business Motivation Model

Decision Vocabulary

[Download]

[Download]

Semantics of Business Vocabulary and Business Rules