Insights from the Adoption of Fact Modeling

MMG Insurance is located in Presque Isle, Maine. We provide home, auto, and small commercial insurance to over 110,000 policyholders serviced in partnership with 155 independent agents in the states of Maine, New Hampshire, Vermont, Pennsylvania, and Virginia.

MMG adopted fact modeling to provide a solid foundation for our business rules approach. As newcomers to the business rules approach, we initially struggled because we had not engaged in fact modeling. Once we discovered the power of fact modeling, we were able to solidify our analysis approach. It helped us speak a common language, improve corporate memory, and realize some unexpected additional benefits.

MMG had a common business rules problem. Our business rules had become buried in legacy platform code. Subject matter experts and architects did not have an easy way to access our operational business rules. When knowledge of an operational business rule was needed, a developer often had to be pulled in to debug the code. Alternatively, people attempted to interpret operational business rules by observing system behavior. These methods were prone to errors, assumptions, and rule gaps.

MMG arrived at this position through a very common development scenario. In our early systems, many rules and processes were not automated, so business people needed to understand a large number of business rules. Many of these rules and processes were highly repetitive, and many were also complex and prone to human error. Automating them promised significant efficiency gains, so we invested heavily in automating all common business rules. During this development period, we consulted subject matter experts to understand the rules and then automated those rules into systems. Once the rules were automated and the systems proved reliable, subject matter experts had less reason to remember all of the business rules. Over time, new rules were placed directly into systems. The systems became the only source of truth, and subject matter experts lost visibility of the business rules.

Once rule visibility was lost, common business rule issues arose. Rule variance was a common issue with several contributing factors. Different subject matter experts had different understandings of the same business rules and would explain them differently. Different developers interpreted the same rules differently. Multiple platforms sprang up, and the same business rules were developed in slightly different ways for different platforms. Rules varied by state, product, platform, and time period. As the rules became increasingly complex and spread out, even developers had difficulty tracking down and explaining what business rules were doing. Impact analysis became increasingly difficult as different sources pointed to different impacts, and it often required multiple developers familiar with different platforms to track rules down. Since the analysis was often lengthy and difficult, it was sometimes avoided, and some projects never started because understanding what needed to be designed was overly complex. Operational maintenance grew, and our capacity to deliver strategic initiatives diminished. The corporate memory that we had automated to make things easier was lost, and without it other development became harder to accomplish.

MMG continued to receive top carrier and technology awards every year. However, with rising maintenance costs and expansion into more states, we recognized that our current model was becoming increasingly burdensome. MMG therefore adopted Enterprise Architecture and formal analysis using a rules-based approach to recapture corporate memory, provide the foundation for alignment between the business and Information Systems, and make changes more quickly.

Several core concepts from Enterprise Architecture have been integral in our ability to develop analysis services.

One important recognition was that we needed an Enterprise change, not just an Information Systems change. In order to recapture corporate memory we needed to move understanding of facts, business rules, and business processes back into the hands of the business and improve alignment between business domains and Information Systems.

Another important recognition was that business processes, business rules, and facts are assets that must be managed. Enterprise Architecture provided the basis to recognize these assets as first-class citizens. It also encouraged us to recognize these assets distinctly from any technical implementation, even when they are not automated at all.

Third, we recognized a need to better understand and manage ownership of our business processes, business rules, and facts. We had information silos that we needed to move away from. Different groups had different understandings of important core concepts, and we needed to break these silos down and achieve a more enterprise-wide understanding of important concepts.

Lastly, an examination of our operating model — or how we integrate and standardize business processes — revealed that we had many facts, rules, and processes we viewed as unique. Our uniqueness was very costly. It hindered our ability to integrate and innovate. We needed to become simpler so that we could improve our ability to integrate, allowing us to combine capabilities in new, innovative ways.

We examined the cost of our concepts. Concepts that did not have a common business domain understanding were very costly. Some concepts were so prone to different understandings that any mention of them inevitably led to long meetings and confusion. We needed at least a common domain understanding of concepts. We knew that we ultimately wanted to achieve a common Enterprise understanding of concepts. We wanted business architects from different business domains to be able to discuss common concepts and share an understanding of what each concept was. If we could achieve this level, the cost of these concepts would be greatly reduced. Finally, if concepts aligned with an industry understanding, and ultimately a world understanding, cost of ownership could be reduced even further. These levels would add a lot of value for common business transactions with business partners and for onboarding new employees. We knew we had a long road ahead and a lot of room to grow.

We started our first major analysis initiative in the fourth quarter of 2011. We had developed our analysis services internally, primarily by utilizing two Programmer/Analysts who were very adept at the Analyst role. We had invested in learning process modeling, including BPMN, and the rules-based approach, primarily by reading material by Ron Ross and articles on BRCommunity.com. We had invested in gearing up for the initiative and in understanding different analysis concepts at a conceptual level. However, having adopted Scrum, Enterprise Architecture, and formal analysis within a short window, we certainly had not developed a thorough understanding of what we were getting into, and we made our fair share of rookie mistakes along the way.

Our first major analysis initiative involved creating a new product using predictive analytics in a new state, using a new architectural approach. We had aimed for a smaller project to build some bench strength, but we could not find a suitable smaller project that would benefit from analysis in the same way. We knew we needed good analysis for a large transformational project so we made the decision to put our skills to the test on one of our largest initiatives. We contained scope by limiting formal analysis to one part of the process, premium calculation, and built in time for learning to make the initiative manageable.

Nevertheless, this was an enormous undertaking, and we were immediately challenged to make sure we were putting our resources in the most important places. We made several bad assumptions, mostly in the name of efficiency, and ultimately these assumptions slowed us down. We assumed that since we were focusing on a single task in a process, premium calculation, we could skip modeling the high-level processes. Why spend valuable resources on modeling when we were working on only a small portion and already knew what that task was? We drafted business rules so that we could identify the concepts that needed to be defined. We assumed that we all spoke pretty much the same language, so term definition was seen as a task to satisfy completeness rather than something that provided real business value. We assumed that logical data models were the right artifacts for helping business people understand our term relationships, so we left the verbs out. Luckily, we recognized these bad assumptions early enough to correct them.

The assumption that people speak pretty much the same language is fairly common. It can be difficult to get recognition and support for an issue that many believe isn't a very big deal. We've used the following test, in multiple scenarios, and have yet to find a case where everyone had a common understanding. It has even held true in several rooms full of insurance professionals where there is a vested interest in a common understanding.

Without reading ahead, take a minute and write down your definition of a vehicle.

Now, for each of the pictures below, decide if it should be considered a vehicle according to your definition.

In a room full of people, it's amazing to see the different perceptions of each of these possible instances. For reference, when we originally defined this term for our underwriting domain, our working definition of vehicle was "a manufactured carrier of goods or passengers." This definition allows for all instances except horses, which makes a lot of sense considering the risks we assess for automobile insurance. However, even within our own organization, our claims domain could challenge this view. What about the horse and buggy that was involved in that accident? Isn't that a vehicle? For others, "vehicle" and "motorized vehicle" are synonymous. How can a skateboard be a vehicle? It isn't even self-propelled.

For many, this is a real eye-opener into the reality that we walk around with different perceptions of common terms. Imagine the confusion when you realize, halfway through a meeting, that the speaker has an entirely different definition of a concept. You immediately start backtracking, trying to figure out which definition the speaker has been using. Now multiply that by a room full of participants and consider how much of the conversation is lost while everyone backtracks. How many conversations or debates have you been involved in that dragged on and on and could have been avoided entirely if everyone had started with a shared understanding of the important concepts? If we want to reach shared knowledge faster, we have to start with a shared understanding of the concepts we will be discussing, and the assumption that we all have a good shared understanding to begin with just doesn't hold up. This is especially true for abstract concepts and for any large group of people from different knowledge areas.

We learned several things the hard way once we started our analysis initiative.

We learned that we had multiple important base concepts that had different meanings to different people. For example, to some people 'endorsement' meant a form attached to a policy to provide additional coverage, and to others it meant a change to a policy, such as adding the new vehicle you just bought. 'Line of business' was even more confusing. Some defined it simply as a separation between personal and commercial business. Others defined it as synonymous with products, such as auto, homeowners, or umbrella. There was also an accounting and reporting definition that could include products, but also other distinctions like the difference between auto physical damage and auto liability damage across numerous products. The different possible meanings of these very common terms were eye-opening.

We also struggled with writing business rules. It was easy to jot something down initially, but once we started integrating rules that were written by different people, the language wasn't consistent. It started to take longer to determine how to phrase the rules. Rules had to be reworked, and the process became slower as more and more rules were added. We weren't realizing the traceability benefits that were intended by this approach.

Our logical data models were another pain point. Business people had a hard time looking at these boxes and symbols and drawing the intended knowledge from them. We found that we were explaining the models a lot, knowledge wasn't being retained as intended, and a business analyst or developer was required to interpret the models whenever we wanted to use them.

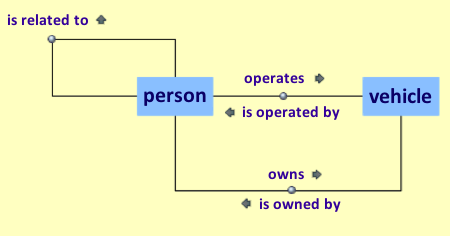

Our early struggles helped us realize that we needed to improve. Ultimately, we discovered fact modeling was the missing foundational piece of our process. Fact modeling — or concept modeling — is a way to create structured vocabulary and visualize core concepts. The following shows a very simple expression of fact types using a fact model.

Figure 1. A Fact Model, showing the simple expression of Fact Types.
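To make the idea of structured vocabulary concrete, here is a minimal sketch (not MMG's actual tooling) of how fact types might be captured as data alongside a glossary. It assumes a simple binary form, "noun concept, verb phrase, noun concept"; the class `FactType`, the function `undefined_terms`, and the glossary layout are hypothetical. The only definition taken from this article is the underwriting definition of vehicle; the two fact types are the ones implied by the vehicle ownership and identification rules discussed later, not a transcription of Figure 1.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical glossary. Only "vehicle" uses a definition from the article;
# None marks a term still awaiting an agreed business definition.
GLOSSARY: Dict[str, Optional[str]] = {
    "vehicle": "a manufactured carrier of goods or passengers",
    "party": None,
    "vehicle identification number": None,
}


@dataclass(frozen=True)
class FactType:
    """A binary fact type: two noun concepts joined by a verb phrase."""
    subject: str
    verb: str
    target: str

    def reading(self) -> str:
        # The verb is what lets the fact type read as natural language.
        return f"{self.subject} {self.verb} {self.target}"


FACT_TYPES: List[FactType] = [
    FactType("vehicle", "is owned by", "party"),
    FactType("vehicle", "is identified by", "vehicle identification number"),
]


def undefined_terms(fact_types: List[FactType], glossary: Dict[str, Optional[str]]) -> List[str]:
    """Return, sorted, the terms used in fact types that lack a definition."""
    missing = set()
    for ft in fact_types:
        for term in (ft.subject, ft.target):
            if glossary.get(term) is None:
                missing.add(term)
    return sorted(missing)


if __name__ == "__main__":
    for ft in FACT_TYPES:
        print(ft.reading())
    print("Needs definition:", undefined_terms(FACT_TYPES, GLOSSARY))
```

Keeping the verb as a first-class field, rather than reducing each relationship to a line between two boxes, reflects the point made below that verbs proved crucial for business readers.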

We discovered the power of fact modeling as part of rules training with Ron Ross and Gladys Lam. We were discussing some of the struggles we were having, and Ron asked what our fact models looked like. We sheepishly answered that we didn't have any. We knew what they were, but with all the new analysis techniques we had incorporated, we had brushed off fact modeling as something we hoped to skip to save time. Once we started developing some basic fact models with Ron and Gladys, we realized how foundational the fact models were to everything we were trying to accomplish. These models would ultimately save us time and a lot of frustration.

Once we started using fact models we had several important revelations. Our business people loved the models for two reasons.

The models used many verbs, which proved to be crucial in accurately showing relationships between concepts. The fact types were also much more natural to talk about. The fact models provided the crucial element of allowing the business people to use their natural language while also providing the structure necessary for speaking explicitly. It was a huge improvement to be able to look at a model organized around central concepts and see the important associated concepts and relationships.

Our other revelation was that expressing concepts around multiplicity and uniqueness was a lot more natural when we simply discussed them with our business people as business rules. For example, we would capture the rules that "a vehicle must be owned by at least one party" and "a vehicle must be identified using a vehicle identification number." We could use the business rules as a basis to create logical and physical data models, but we'd reserve those models for our technical people and let our business people see the business rules.
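The two rules above can be read as constraints on instance data. The following is a minimal sketch, assuming a hypothetical `Vehicle` record with a VIN and a list of owning parties; the field names and sample VINs are invented for illustration. It also assumes that "identified using a vehicle identification number" means every vehicle has a VIN and no two vehicles share one, which is one reasonable reading rather than a rule stated in the article.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Vehicle:
    """A vehicle instance; field names are hypothetical."""
    vin: Optional[str]
    owners: List[str] = field(default_factory=list)


def check_vehicle_rules(vehicles: List[Vehicle]) -> List[str]:
    """Return one message for each violation of the two vehicle rules."""
    violations = []
    for i, v in enumerate(vehicles):
        # Rule: a vehicle must be owned by at least one party.
        if not v.owners:
            violations.append(f"vehicle #{i}: must be owned by at least one party")
        # Rule: a vehicle must be identified using a vehicle identification number.
        if not v.vin:
            violations.append(
                f"vehicle #{i}: must be identified using a vehicle identification number"
            )
    # Assumed reading of "identified": no two vehicles share the same VIN.
    counts = Counter(v.vin for v in vehicles if v.vin)
    for vin, n in sorted(counts.items()):
        if n > 1:
            violations.append(f"VIN {vin}: identifies {n} vehicles")
    return violations


if __name__ == "__main__":
    sample = [
        Vehicle("VIN-A", ["Pat"]),
        Vehicle(None, ["Lee"]),
        Vehicle("VIN-A", []),
    ]
    for message in check_vehicle_rules(sample):
        print(message)
```

The business-facing artifact stays the plain rule sentence; a check like this is the kind of technical derivation we would reserve for technical people, alongside logical and physical data models.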

Fact modeling has its own set of challenges as well. We knew we wanted the advantages of fact modeling, but we had to accept a learning curve in order to realize these benefits.

One of the issues we experienced was determining the right people to involve in fact modeling. We decided to start by creating fact models at the domain level. We discovered that some of our subject matter experts were very good at discussing processes or systems but found the more abstract sessions harder. In those sessions we needed to establish what things are and to discuss concepts free of any system constraints that could lead to incorrect understandings. This wasn't as easy for some of our business people, and it took some time to find the right people.

We also spent a fair amount of time discussing how we could speed up the process. The process was very iterative. We'd start producing a model, discuss it, and realize that not all of our concepts were holding up. Some models took multiple iterations before we finally landed in a spot that made sense to all participants and held up to some scrutiny. There was an initial assumption that if we couldn't move through all the models quickly, we must be doing something wrong. Without the necessary experience, our assumptions about time and effort were simply unrealistic. We had started with some basic abstract concepts, and these proved some of the most difficult to gain agreement on. If you can't see and touch something, it's very easy for each member of a group to picture something different.

Lastly, we struggled with some of our own unique language, which differed from industry or world definitions. We discovered areas where we had unique concepts without any business value in being unique. We had drifted into these unique interpretations over time, and these concepts took longer to resolve.

Several items were instrumental in keeping support for fact modeling alive, despite our initial learning curve and the date pressure of a major initiative. Most importantly, we had top level sponsorship. Our executive sponsor understood the value of the business rules approach, understood how fact modeling was instrumental in delivering this approach, and knew there would be some learning curve in adopting new techniques. A clear description of our vision and some honesty about what it would take to learn new skills were vital in obtaining this sponsorship.

After some early struggles, we were able to get the right group of people together for some sustained sessions. With the right group and adequate time for sustained conversations, we accelerated our progress. The right group of people included an individual who was less familiar with MMG vocabulary, and that perspective helped us identify concepts that were not aligned with common understandings.

The last crucial piece was that we consistently hammered the understanding that fact modeling is a one-time investment. It is creating a foundation that will stand up over time, and this foundation is critical to all the other analysis that will be built on top of it. We consistently reinforced that this solid foundation would improve the other aspects of analysis that we had been struggling with before engaging in fact modeling.

In the end, fact modeling did provide the solid foundation we had been missing and improved other areas of analysis. Some terms were defined by the model itself, such as roles and objectifications. We were also able to gain some valuable term insight through visualization. For example, if we were viewing how a concept related to other concepts, it became easier to tell when a concept may not have been defined consistently. Differences in kinds of things versus the actual things themselves — or inventory versus reality — became significantly easier to discuss. We found that these were important differences we had commonly overlooked.

Most importantly, we were able to agree on a common language that went all the way from our business people to our physical implementation. Data modelers didn't have to worry about what to name things, and developers didn't debate what to call concepts in code. When these concepts needed to be discussed, the conversations went much more smoothly since participants all used the same language. There was much less explaining to do about what you meant.

Business rules improved dramatically. They were faster to write since the language had its basis in fact types. This removed the debate about how to phrase things. The rules were much clearer since they were based on fact types and defined concepts. We were also able to realize the traceability we had been looking for. Since the business rules were now based on a consistent set of fact types, we could trace usage of concepts and fact types through all of our analysis artifacts.
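Because every rule is built on fact types, traceability reduces to indexing rules by the fact types and concepts they use. The sketch below is a hypothetical illustration, not MMG's rulebook: the rule IDs `R1` and `R2` and the helper functions are invented, while the rule wording is taken from this article. It shows how a concept-level impact question ("which rules use party?") becomes a simple lookup rather than a multi-developer code search.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

FactType = Tuple[str, str, str]  # (subject concept, verb phrase, object concept)

# Hypothetical rulebook: rule IDs are invented; the rule wording is from the article.
RULES: Dict[str, Tuple[str, List[FactType]]] = {
    "R1": ("A vehicle must be owned by at least one party.",
           [("vehicle", "is owned by", "party")]),
    "R2": ("A vehicle must be identified using a vehicle identification number.",
           [("vehicle", "is identified by", "vehicle identification number")]),
}


def rules_by_fact_type(rules: Dict[str, Tuple[str, List[FactType]]]) -> Dict[FactType, Set[str]]:
    """Index each fact type to the IDs of the rules built on it."""
    index: Dict[FactType, Set[str]] = defaultdict(set)
    for rule_id, (_text, fact_types) in rules.items():
        for ft in fact_types:
            index[ft].add(rule_id)
    return dict(index)


def rules_using_concept(rules: Dict[str, Tuple[str, List[FactType]]], concept: str) -> List[str]:
    """Return, sorted, the IDs of rules whose fact types mention the concept."""
    return sorted(
        rule_id
        for rule_id, (_text, fact_types) in rules.items()
        if any(concept in (subj, obj) for subj, _verb, obj in fact_types)
    )


if __name__ == "__main__":
    print(rules_using_concept(RULES, "party"))
    print(rules_using_concept(RULES, "vehicle"))
```

The design choice worth noting is that the index is derived from the rules rather than maintained by hand, so it stays consistent as long as every rule is expressed in terms of defined fact types.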

Our logical data models ultimately took on much less importance, and therefore, we were able to avoid some work in this area. The models provided little value to our business users. Our data modelers were happy with a solid fact model and business rules to explain important constraints so in most cases logical data modeling was not necessary.

MMG uses an agile development methodology so we firmly believe in retrospectives. We had many important retrospective realizations along the way and three project-level realizations that now guide our development efforts. Before starting any large development project that will utilize analysis we make sure that core concepts are defined, core concepts are fact modeled, and high-level processes are modeled.

When we look back at our major analysis initiative, fact modeling provided the basis to help us evolve and overcome some of the obstacles that had plagued us in the past. It helped us speak a common language. Corporate memory was improved, and we had a single source of the truth for our new rating product. Traceability was vastly improved. We had a solid foundation that had helped us complete our analysis for this initiative and that could be reused in future initiatives.

We also had one unexpected benefit. We were able to gain conceptual insight from our concept models that helped us understand how we were coupling concepts together that could be expressed independently. Ultimately, our concept model pointed to needed process improvements. We realized we could be breaking decisions apart into smaller, more reusable components. We gained very valuable insights through our fact modeling experience that helped improve numerous areas of our business.

The benefits of our fact modeling approach were ultimately confirmed in two ways. First, the implementation of our new rating product, which covered 8,000 complex lines of code, had fewer than 10 bugs reported before release and zero bugs reported after release. This was a big analysis win compared to some previous experiences. The second confirmation came from the opening lines of an email from one of our business architects. It started, "I was looking through the business rules and noticed…." Our business architect was able to sit down at her own computer, open our general rulebook, and gain insight independently. This was a vast improvement over the days when a team of developers would have needed to assemble to gather up the business rules for examination. It demonstrated in a very simple manner how far we had evolved over the course of this process. These two simple confirmations helped reaffirm the benefits we realized and provided the necessary fuel to make sure these new techniques are fully supported in our future initiatives.

# # #
