What Is XAI? Why and How Transparency Increases the Success of AI Solutions

Artificial Intelligence (AI) is increasingly popular in business initiatives across many industries, including healthcare and financial services, as well as in corporate business functions such as finance and sales. Did you know that AI startups raise more money than startups outside AI? And that within the next decade, every individual is expected to interact with AI-based technology on a daily basis?

AI is a technology trend covering any development that automates or optimizes tasks that have traditionally required human intelligence. Industry experts prefer to refine this broad definition by distinguishing between machine learning, statistics, IT, and rule-based systems. The availability of huge amounts of data and greater processing power, rather than major technological innovations, has made machine learning for better predictions the most popular technique today. However, I will argue that other AI techniques are equally important.

The consequences of AI innovations for humanity have been huge and were, at the time, difficult to foresee. Pioneers, visionaries, investments, and failures were all needed to get us to where we are today. I am very grateful for the results. Every day I use a computer, a smartphone, and other technology that provides me with travel advice, ways to socialize, recommendations on what to do or buy, and other new knowledge. Many of these innovations are related to technology developed by researchers in Artificial Intelligence, and its full potential has not yet been realized.

But there are also concerns

Artificial intelligence solutions are widely regarded as black boxes: they provide answers without an explanation, like an oracle. You may already have seen the consequences in our society: AI is said to be biased, governments raise concerns about the ethical consequences of AI, and regulators require more transparency.

We should be embracing the improvements that AI can bring to human decision-making in companies, but instead, people have become skeptical about AI technology. This is not only because they fear losing their jobs but also because, as experts, they are aware of all the uncertainties that surround their work. How can an AI algorithm deal with these uncertainties?

Examples of AI biases

AI systems have been shown to be biased by gender (favoring men for job offers) and by ethnicity (classifying pictures of Black people as gorillas). These biases result from the data used to train the algorithms, which contained fewer female job seekers and more pictures of lighter-skinned people. Let's not forget that this data is created and selected by humans, who are themselves biased.
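To make this mechanism concrete, here is a deliberately simplified, hypothetical sketch in Python: a "model" that does nothing more than learn historical hire rates per group from past decisions. The data set, the function names, and the threshold are invented for illustration; real recruitment models are far more complex, but they can absorb the same kind of skew from their training data.

```python
from collections import Counter

# Hypothetical historical hiring records: (gender, was_hired).
# The numbers are invented to mimic a skewed past: few female applicants,
# and those few were hired less often.
history = (
    [("male", True)] * 60 + [("male", False)] * 20
    + [("female", True)] * 5 + [("female", False)] * 15
)

def train_hire_rates(records):
    """Learn P(hired | gender) by simple counting over past decisions."""
    applicants = Counter(gender for gender, _ in records)
    hires = Counter(gender for gender, hired in records if hired)
    return {g: hires[g] / applicants[g] for g in applicants}

def recommend(model, gender, threshold=0.5):
    """Recommend a candidate if the learned hire rate for their group meets the threshold."""
    return model[gender] >= threshold

model = train_hire_rates(history)
print(model)                       # {'male': 0.75, 'female': 0.25}
print(recommend(model, "male"))    # True
print(recommend(model, "female"))  # False
```

Nobody wrote a rule saying "prefer men"; the model simply reproduces the pattern in the data it was given, which is why the origin and selection of training data matter so much.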

Perhaps you need to make choices and guide your company to compete using AI. What approach could you follow without losing the trust of your own employees or customers?

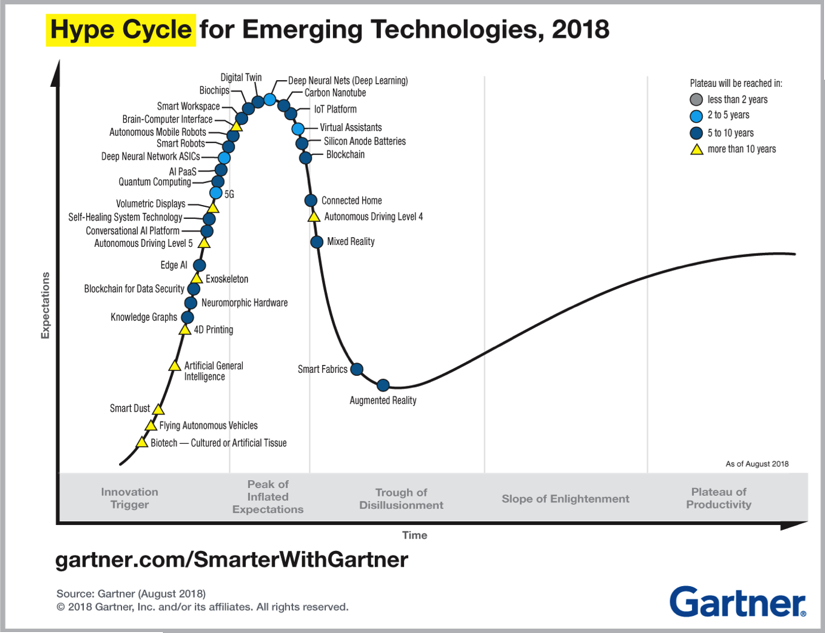

Figure 1. Hype Cycle for Emerging Technologies.

Now that AI technology is at the peak of the hype cycle for emerging technologies (see Figure 1), more conservative businesses want to use the benefits of AI-based solutions in their operations. However, they require an answer to some or all of the concerns mentioned above.

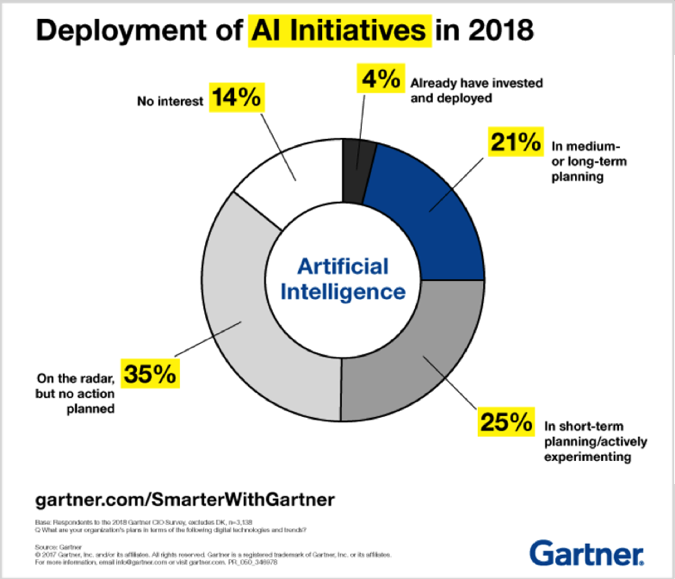

Figure 2. Deployment of AI Initiatives in 2018.

To benefit from the potential of AI, the resulting decisions must be explainable. For me this is a no-brainer, since I have been promoting transparency in decision-making for years using rule-based technology. In my vision, a decision-support system needs to be integrated into the value cycle of an organization. Business stakeholders should feel responsible for the knowledge and behavior of the system and confident in its outcomes. This may sound logical and easy, but anyone with experience in the corporate world knows it is not. The gap between business and IT is filled with misunderstandings, as well as differences in presentation and expectations.

It takes two to tango. The business, represented by subject matter experts, policy makers, managers, executives, and sometimes external stakeholders or operations, should take responsibility using knowledge representations it understands, and IT should create integrated systems that relate directly to the policies, values, and KPIs of the business. Generating explanations for decisions plays a crucial role in this. We should do the same for AI-based decisions: choose AI technology when needed, and use explanations to make it a success. This is explainable AI, known by the acronym 'XAI'.
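As an illustration of a decision that explains itself, the following minimal Python sketch evaluates a few business rules and returns both the outcome and the rules that produced it. The loan scenario, rule texts, thresholds, and the `Rule` and `decide` names are hypothetical and are not taken from the author's six-step method; the sketch only shows that each decision can carry a readable trace that business stakeholders can review.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Rule:
    """A business rule: a readable statement plus a condition that signals a violation."""
    text: str
    violated_by: Callable[[dict], bool]

# Hypothetical policy rules; texts and thresholds are illustrative only.
RULES = [
    Rule("An applicant must be at least 18 years old.",
         lambda a: a["age"] < 18),
    Rule("Monthly repayments must not exceed 40% of monthly income.",
         lambda a: a["monthly_repayment"] > 0.4 * a["monthly_income"]),
]

def decide(applicant: dict) -> tuple[str, list[str]]:
    """Return the decision together with the rule statements that explain it."""
    violations = [r.text for r in RULES if r.violated_by(applicant)]
    if violations:
        return "rejected", violations
    return "approved", ["All applicable rules are satisfied."]

decision, explanation = decide(
    {"age": 35, "monthly_income": 3000, "monthly_repayment": 1500}
)
print(decision)  # rejected
for reason in explanation:
    print("-", reason)  # - Monthly repayments must not exceed 40% of monthly income.
```

Because the explanation is expressed in the same business language as the policy, a subject matter expert can check each decision against that policy and spot a rule that should not be there.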

Five reasons why XAI solutions are more successful

There are five reasons why XAI solutions are more successful than an AI-based 'oracle' (or any black-box IT system):

- Decision support systems that explain themselves have a better return on investment because explanations close the feedback loop between strategy and operations, resulting in timely adaptation to changes, longer system lifetime, and better integration with business values.

- Offering explanations enhances stakeholder trust because the decisions become credible to your customers and your business becomes accountable to regulators.

- Decisions with explanations become better decisions because the explanations show (unwanted) biases and help to include missing, commonsense knowledge.

- With the six-step method that I developed [1], and with technology expected to emerge from increased research activity, it is feasible to implement AI solutions that generate explanations without a large drop in performance.

- It prepares you for the growing demand for transparency, driven by concerns about the ethics of AI and its effect on the foundations of a democratic society.

In my upcoming book (available on Amazon) entitled "AIX: Artificial Intelligence needs eXplanation," I detail each of the above reasons, with examples and practical guidance. This will provide you with a good understanding of what it takes to explain solutions that support or automate a decision task and the value that explanations add to your organization.

References

[1] Silvie Spreeuwenberg, "What is XAI? How to Apply XAI," Business Rules Journal, Vol. 20, No. 7, (Jul. 2019) URL: http://www.brcommunity.com/a2019/c002.html

# # #
